How a no-code tool revolutionized our ETL process

April 26

Have you ever felt unsure about trying something, only to be relieved that you gave it a shot as soon as you tried it? Today’s article focuses on one such tool that took me through exactly those feelings. We’re going to talk about Hevo Data, a no-code data pipeline tool that we used in one of our projects.

The problem

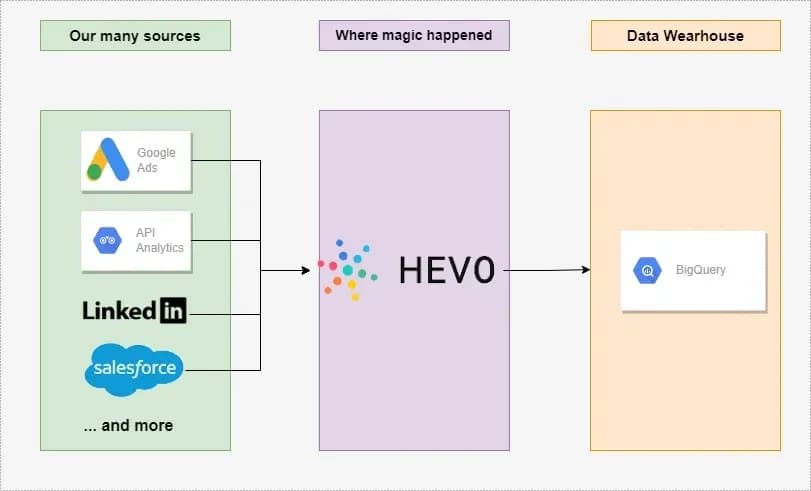

Let’s start at the beginning. When the client approached us, their data was spread across all the platforms they used to generate leads, as well as Salesforce, WebEngage and other internal tools. We were also going to need data from various ad platforms such as Facebook, LinkedIn and Google Ads.

Our first priority was to bring the data into one location and build a warehouse for reporting. We selected Google BigQuery as our destination. The first platform we decided to tackle was WebEngage, which relies on webhooks to get data out of the system. For this, we went with the conventional method of writing our own code and deploying it using Google Cloud Functions. It worked very well for us since we required a lot of customizations; however, it took more time than we expected.
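To give a feel for the kind of custom code this involved, here is a minimal sketch of the core step: turning a nested webhook payload into a flat row that can be loaded into a warehouse table. It is an illustration only, not our production code. The payload fields and the function names `flatten_event` and `handle_webhook` are hypothetical, and the actual load into BigQuery is left out.

```python
import json
from typing import Any


def flatten_event(payload: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested JSON objects into a single-level row keyed by column name."""
    row: dict[str, Any] = {}
    for key, value in payload.items():
        column = f"{prefix}_{key}" if prefix else key
        if isinstance(value, dict):
            # Nested objects become prefixed columns, e.g. user.id -> user_id.
            row.update(flatten_event(value, column))
        elif isinstance(value, list):
            # Lists are kept as JSON strings so the row stays flat.
            row[column] = json.dumps(value)
        else:
            row[column] = value
    return row


def handle_webhook(body: str) -> dict[str, Any]:
    """Parse a raw webhook body (hypothetical shape) into a warehouse-ready row."""
    return flatten_event(json.loads(body))


if __name__ == "__main__":
    sample = '{"event": "email_open", "user": {"id": "u1", "city": "Pune"}, "tags": ["a", "b"]}'
    print(handle_webhook(sample))
```

Every new event type or nested field tends to need another rule like these, which is where much of the time goes with hand-written pipelines.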

When we moved to the next platform, Google Analytics, and tried the same approach, we realized that it was not going to work: there was a limit on how many dimensions and metrics we could pull with each function, and writing custom code for the kind of data we wanted was going to be time-consuming. That is when we came across Hevo Data.
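The limit described above is what makes the hand-written route tedious: a wide report has to be split into several requests that share the same dimensions, and the results then have to be joined back together. A rough sketch of the splitting step follows. The cap `max_metrics` is an assumed parameter rather than a documented value, and `plan_requests` is a hypothetical helper, not part of any Google client library.

```python
from typing import Iterator


def batch(items: list[str], max_per_request: int) -> Iterator[list[str]]:
    """Yield consecutive chunks of at most max_per_request items."""
    if max_per_request < 1:
        raise ValueError("max_per_request must be at least 1")
    for start in range(0, len(items), max_per_request):
        yield items[start:start + max_per_request]


def plan_requests(
    dimensions: list[str], metrics: list[str], max_metrics: int
) -> list[dict[str, list[str]]]:
    """Build one request spec per metric chunk, repeating the shared dimensions."""
    return [
        {"dimensions": dimensions, "metrics": chunk}
        for chunk in batch(metrics, max_metrics)
    ]


if __name__ == "__main__":
    # Metric names here are placeholders; the cap of 2 is only for illustration.
    for spec in plan_requests(["date"], ["sessions", "users", "bounces"], max_metrics=2):
        print(spec)
```

Each request spec would still need its own fetch, pagination and error handling, plus a join on the shared dimensions afterwards.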

Enter Hevo Data

After some initial deliberation about depending heavily on a single platform, we decided to take a leap of faith, and we soon realised that a no-code data pipeline is a lifesaver for data professionals. It gave us a smooth way to integrate data from all our sources, clean it, transform it and load it into the destination of our choice, all without writing a single line of code or spending any time on making connectors work. Moreover, Hevo did all of this at an economical cost for more than 100 sources and destinations while freeing up a lot of engineering hours.

It also significantly increased collaboration between the business and technology teams, as it was easier for the business to understand Hevo’s simple user interface, which graphically represents the flow of the data.

Our first pipeline with Hevo was up and running in just a few minutes. All we had to do was choose the source, authorize it and choose the destination. That’s it: three clicks and we were up and running. Hevo also has a historical load feature, which allowed us to bring in all our data from the last 12 months so that analytics could start immediately.

Mapping and Warehousing

When it comes to warehousing and selecting the destination, there are two features that we loved the most: auto mapping and a managed warehouse. Auto mapping uses Hevo’s internal engine to automatically detect the schema of the source data and create an appropriate destination table, which avoids the effort of manual schema creation. When we had a few changes in our source data, it also detected them automatically and updated the schema of the destination. To simplify things further, Hevo provides a fully managed BigQuery-based warehouse which allows you to store, manipulate, query and analyze huge amounts of data without having to spend time configuring or maintaining the warehouse. It also connects easily to our favourite BI tools from right within the Hevo UI.
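To illustrate what auto mapping spares you from, here is a simplified sketch of schema detection over sample records, along with a check for columns that have newly appeared in the source. This is not Hevo’s engine, whose internals we have not seen. The type names and the helpers `infer_schema` and `new_columns` are assumptions made for this example.

```python
from typing import Any


def column_type(value: Any) -> str:
    """Map a Python value to a warehouse-style column type."""
    # bool is checked first because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "BOOLEAN"
    if isinstance(value, int):
        return "INTEGER"
    if isinstance(value, float):
        return "FLOAT"
    return "STRING"


def infer_schema(records: list[dict[str, Any]]) -> dict[str, str]:
    """Infer one type per column, widening when records disagree."""
    schema: dict[str, str] = {}
    for record in records:
        for name, value in record.items():
            if value is None:
                continue  # nulls carry no type information
            detected = column_type(value)
            current = schema.get(name)
            if current is None or current == detected:
                schema[name] = detected
            elif {current, detected} == {"INTEGER", "FLOAT"}:
                schema[name] = "FLOAT"  # widen integers to floats
            else:
                schema[name] = "STRING"  # fall back to the most general type
    return schema


def new_columns(existing: dict[str, str], incoming: dict[str, str]) -> dict[str, str]:
    """Return columns present in the incoming schema but missing from the table."""
    return {name: kind for name, kind in incoming.items() if name not in existing}


if __name__ == "__main__":
    rows = [{"id": 1, "score": 2}, {"id": 2, "score": 2.5, "active": True}]
    detected = infer_schema(rows)
    print(detected)
    print(new_columns({"id": "INTEGER"}, detected))
```

Doing this by hand also means issuing the matching table changes in the warehouse, which is the part the tool took off our plate.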

Transformations

Transformations have also been quick and efficient with this tool. It allowed us to transform and enrich both structured and unstructured data through a drag-and-drop UI, and it let us accomplish slightly more complex transformations through a Python coding interface that our data engineers fell in love with immediately.
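As a flavour of the kind of logic that fits a code-based transformation, here is a small sketch that cleans and enriches a single event. It deliberately uses a plain dictionary as a stand-in for the event, so it is not Hevo’s actual transformation API. The field names `email` and `email_domain` are hypothetical.

```python
from typing import Any, Optional


def transform(event: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Normalise the email field, add its domain, and drop events without an email."""
    email = (event.get("email") or "").strip().lower()
    if not email:
        return None  # returning None stands for "skip this event"
    cleaned = dict(event)  # copy so the input event is left untouched
    cleaned["email"] = email
    cleaned["email_domain"] = email.split("@")[-1]
    return cleaned


if __name__ == "__main__":
    print(transform({"email": "  Lead@Example.com ", "source": "facebook"}))
    print(transform({"email": None, "source": "linkedin"}))
```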

Reverse ETL

Another useful feature that Hevo provides is Reverse ETL, which helps users move data from a warehouse to various CMS platforms. While we have not explored this yet, it does look interesting, and we will surely use it for some other use case.

Conclusion

The simple and crisp documentation ensured that, for the most part, we did not need to get in touch with the support team at Hevo. In the rare cases when we did need to reach out, they promptly resolved whatever snag we had hit.

In conclusion, while this might sound like a paid promotion, it is not. It is a genuine appreciation article for a tool that has been economical and time-saving for the data team here at Tunicalabs Media Pvt. Ltd., and we definitely see ourselves using it for our future projects.
